Minwoo Son

January 7, 2026

Hello! I’m Minwoo Son from ENERZAi’s Business Development team. In earlier posts, we’ve shared ENERZAi’s full-stack software capabilities for delivering high-performance on-device AI, including Optimium, our proprietary AI compiler that encapsulates our optimization expertise; Nadya, a metaprogramming language that enables efficient optimization; and the extreme low-bit (1.58-bit) speech recognition AI model built on top of them.

Today, rather than diving into specific technologies, I’d like to step back and talk about the more fundamental question: why on-device AI matters.

In our first meeting with a customer about implementing on-device AI, the opening question is usually the same: “If we can simply use the latest cloud-based LLM via an API, why would we need to run AI models on the device?” It is true that on-device systems cannot easily match the raw compute power of servers. Still, on-device AI is essential, not for performance reasons but for structural ones.

There are three key points: cost, service reliability, and privacy.

1. Cost: API costs are inherently unpredictable

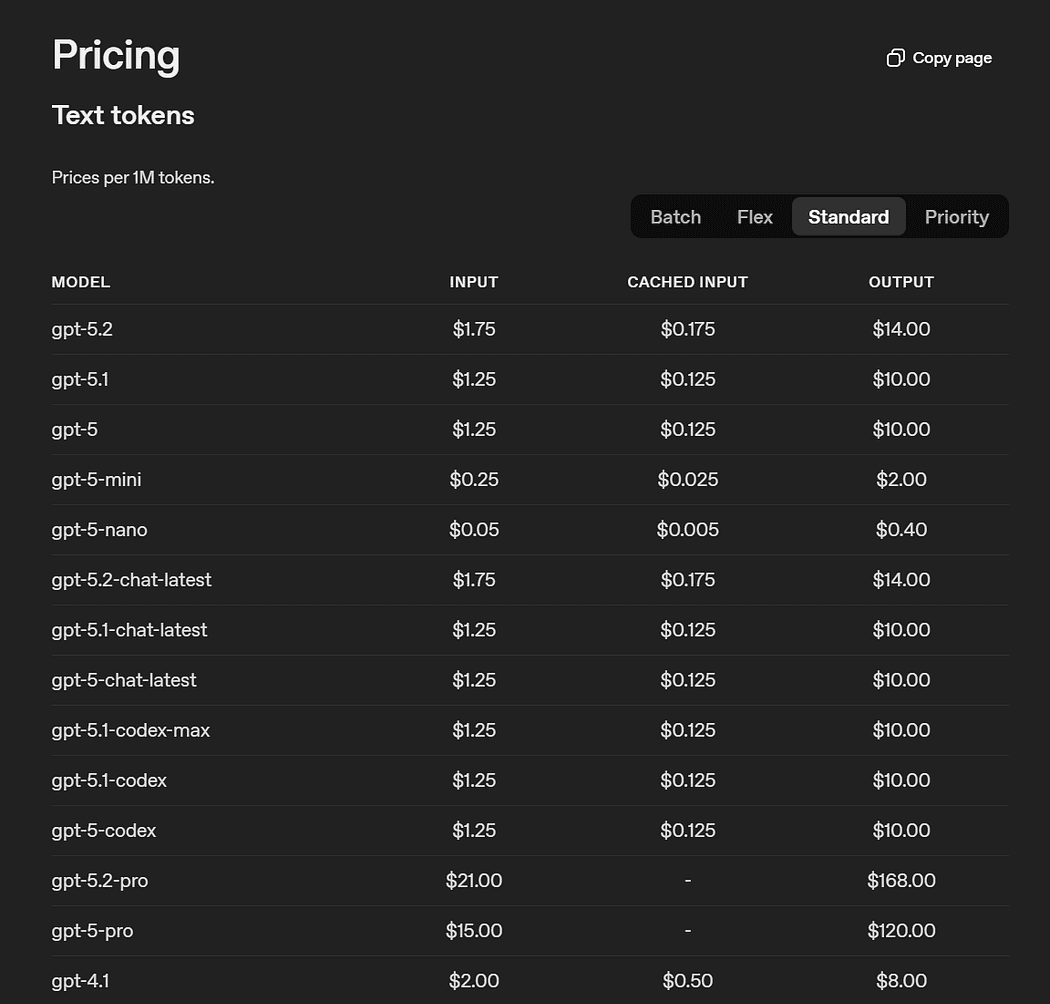

Many of the big-tech AI platforms that power today’s AI services charge based on the amount of data processed. If you look at OpenAI’s LLM API pricing as an example, the cost per one million tokens is clearly listed by model — and they even bill input tokens and output tokens separately.

Source: OpenAI

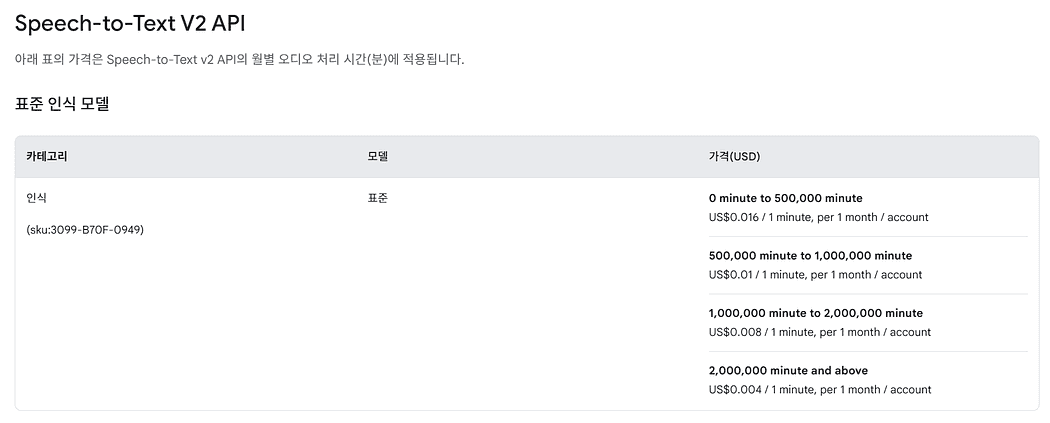

The same applies to Speech-to-Text (STT) model APIs, which are essential for voice AI services that convert speech into text that language models can handle. If you look at Google Cloud’s STT API pricing policy, the price is determined in proportion to the length and volume of the audio input.

Source: Google Cloud

In this structure, cost increases linearly as API usage grows.

Smart home is one of the domains where this billing model becomes especially painful. Users talk to smart-home devices — such as wall pads and set-top boxes — multiple times a day, and as they get used to voice AI, their requests often evolve from simple commands to questions requiring reasoning. With wall pads, most early requests are like “turn on the lights,” but over time, users begin asking context-heavy questions like why the electricity bill increased or why the temperature feels unusual. At that point, not only does the number of API calls increase, but the number of tokens per call tends to rise as well.

A more practical problem is that usage spikes according to daily routines. For set-top boxes, queries concentrate during hours when the whole family is using the device — after work, before bedtime, or on weekends. Conversational requests also tend to chain together once they start. Some households might use voice AI only a few times a day, while others — especially with kids — may repeat requests dozens of times just for fun. From a service provider’s perspective, consumption is nearly impossible to control, and the API budget becomes dependent on unpredictable user behavior.
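To make the linear billing concrete, here is a minimal sketch of the arithmetic. The prices and household profiles are hypothetical placeholders chosen for illustration, not actual vendor rates or measured usage; `TokenPricing` and `monthly_api_cost` are names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical per-million-token prices in USD (not real vendor rates)."""
    input_per_million: float
    output_per_million: float


def monthly_api_cost(calls_per_day: float, input_tokens: int, output_tokens: int,
                     pricing: TokenPricing, days: int = 30) -> float:
    """Usage-based bill: linear in the number of calls and in tokens per call."""
    per_call = (input_tokens * pricing.input_per_million
                + output_tokens * pricing.output_per_million) / 1_000_000
    return calls_per_day * days * per_call


pricing = TokenPricing(input_per_million=1.0, output_per_million=4.0)

# Assumed profile A: a few short commands per day.
light = monthly_api_cost(calls_per_day=5, input_tokens=200, output_tokens=50,
                         pricing=pricing)
# Assumed profile B: many context-heavy questions per day.
heavy = monthly_api_cost(calls_per_day=40, input_tokens=1500, output_tokens=400,
                         pricing=pricing)

print(f"{light:.2f} {heavy:.2f}")  # prints "0.06 3.72"
```

Under these assumed numbers, the second household costs about 62 times as much as the first. Because call count and tokens per call multiply, the spread between households is what makes the total budget hard to forecast.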

This is where on-device AI shines. By handling most requests locally and minimizing API calls, you can reduce operating costs — and, importantly, forecast those costs more accurately. The cloud only needs to be invoked when external knowledge or truly complex reasoning is required.
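The local-first idea described above can be sketched roughly as follows. This is an illustration under stated assumptions, not ENERZAi’s implementation: `route`, `LocalResult`, the confidence threshold of 0.8, and the toy stand-in models are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass(frozen=True)
class LocalResult:
    intent: Optional[str]  # None when no known command matched
    confidence: float      # 0.0 to 1.0


def route(utterance: str,
          local_nlu: Callable[[str], LocalResult],
          cloud_llm: Callable[[str], str],
          threshold: float = 0.8) -> Tuple[str, str]:
    """Answer on the device when the local model is confident, else call the cloud."""
    result = local_nlu(utterance)
    if result.intent is not None and result.confidence >= threshold:
        return ("device", result.intent)
    return ("cloud", cloud_llm(utterance))


# Toy stand-ins so the sketch runs without any model or network access.
COMMANDS = {"turn on the lights": "lights_on", "turn off the lights": "lights_off"}


def toy_local_nlu(utterance: str) -> LocalResult:
    intent = COMMANDS.get(utterance.lower().strip())
    return LocalResult(intent, 0.95 if intent else 0.0)


cloud_calls = []


def toy_cloud_llm(utterance: str) -> str:
    cloud_calls.append(utterance)  # each entry would be one billed API call
    return f"(cloud answer to: {utterance})"


print(route("Turn on the lights", toy_local_nlu, toy_cloud_llm))
print(route("Why did my electricity bill increase?", toy_local_nlu, toy_cloud_llm))
```

Only the second request reaches the cloud stub, so the billed volume tracks the share of requests that genuinely need external knowledge or complex reasoning rather than total usage.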

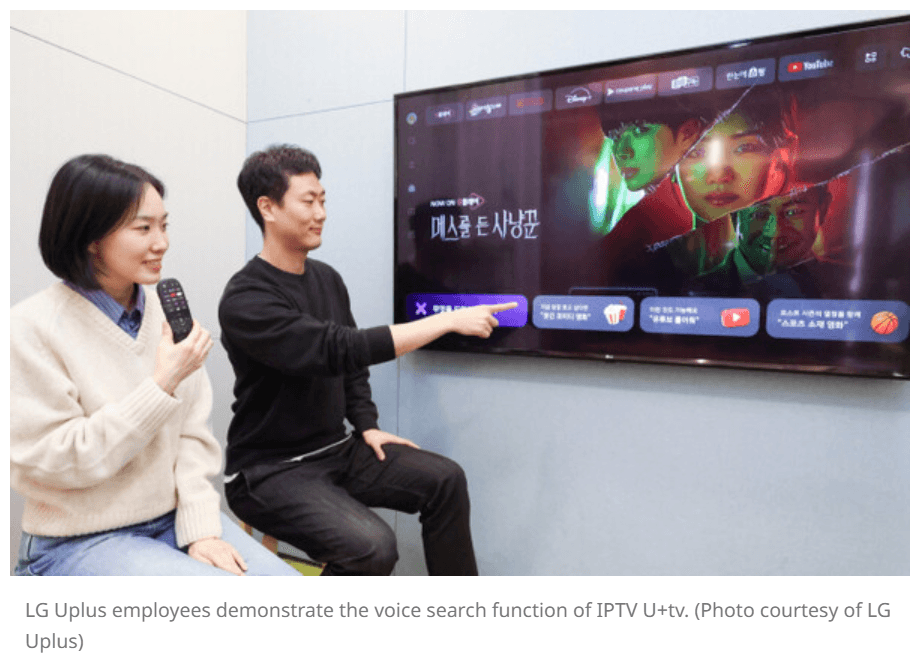

Ultimately, on-device AI is a key strategy for keeping costs predictable and sustainable. ENERZAi has already executed this strategy by developing an efficient on-device voice control AI model and deploying it commercially across two million LG U+ set-top boxes.

2. Service reliability: when the network goes down, AI goes down

Recently, there was a major controversy after a large-scale power outage in San Francisco caused a robotaxi service to be temporarily suspended, with some vehicles reportedly stopping in intersections or blocking roads and creating congestion.

Source: Car and Driver

The implication is clear: when the network becomes unstable, cloud-based AI becomes unstable too.

Cloud-based AI usually works reliably in normal conditions. But services are often judged by how they behave in the exceptions, when something goes wrong. And networks are less stable than we often assume. In environments like basements or rural areas, connectivity can degrade. While moving, repeated handoffs between cell towers can add up to noticeable latency. At large events, sudden traffic surges are common. Even intermittent delays can quickly degrade the experience of conversational AI.

This becomes even more critical because AI increasingly sits at the center of the service flow. Conversational AI must respond immediately; a 1–2 second delay is often perceived as poor quality. Users care less about how “smart” the model is, and more about whether it reacts right away when they speak. So even with a technically strong model, the product can be perceived as unreliable in real-world environments.

On-device AI acts as a safety net. Even when the network fluctuates, core functions can remain available locally — and when connectivity recovers, the system can switch back to cloud-based inference. In short, on-device AI enables the reliability that high-quality AI services require.
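One way to picture this safety net is a latency budget on the cloud call, with the local model answering whenever the budget is exceeded or the connection fails. The sketch below is a hypothetical illustration: `answer`, the one-second default budget, and the stub functions are assumptions for this example, not a description of any specific product.

```python
import concurrent.futures
import time
from typing import Callable, Tuple

# Shared worker pool so a slow cloud call never blocks the caller past its budget.
_pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def answer(utterance: str,
           cloud_infer: Callable[[str], str],
           local_infer: Callable[[str], str],
           budget_s: float = 1.0) -> Tuple[str, str]:
    """Prefer the cloud, but fall back to the device on timeout or network error."""
    future = _pool.submit(cloud_infer, utterance)
    try:
        return ("cloud", future.result(timeout=budget_s))
    except (concurrent.futures.TimeoutError, OSError):
        # OSError also covers ConnectionError raised by the cloud call.
        future.cancel()  # no effect if the call is already running
        return ("device", local_infer(utterance))


# Stubs that simulate a healthy, a slow, and an unreachable cloud endpoint.
def healthy_cloud(utterance: str) -> str:
    return "cloud reply"


def slow_cloud(utterance: str) -> str:
    time.sleep(0.3)
    return "late cloud reply"


def unreachable_cloud(utterance: str) -> str:
    raise ConnectionError("network down")


def local_model(utterance: str) -> str:
    return "local reply"


print(answer("turn on the lights", healthy_cloud, local_model))
print(answer("turn on the lights", slow_cloud, local_model, budget_s=0.05))
print(answer("turn on the lights", unreachable_cloud, local_model))
```

Each request is evaluated independently, so once connectivity recovers the same code path returns to cloud-based inference without any explicit mode switch.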

3. Privacy: the moment data leaves the device, it becomes risk

As large-scale personal data breaches continue across industries such as telecom and e-commerce, both companies and users are becoming more cautious about architectures where data leaves the device. Privacy incidents are no longer one-off issues — they are operational risks that directly affect business continuity. When a breach occurs, it doesn’t end with a quick patch. Government investigations follow, legal and compensation costs escalate, and the largest costs often come later in the form of long-term customer churn and erosion of brand trust.

AI makes this harder because the nature of collected data changes. Traditional service data was often structured — click logs, purchase histories, and similar records. But data generated through conversational AI is natural language. Natural language can contain highly sensitive information: intent, habits, emotions, work context, and more. Even without explicit personally identifiable information, re-identification becomes more likely through context. In some cases, the question itself can generate new sensitive information.

In this context, privacy is not just a technical issue — it’s also a trust issue. From a user’s perspective, the key question isn’t how strong the encryption is; it’s simply: “Is my data leaving the device?”

On-device AI can change that structure. If sensitive data is processed on the device and outbound data is minimized to only what is necessary, you reduce the attack surface and limit potential damage. More than anything, being able to say “your data does not leave your device” is one of the most intuitive and powerful trust signals.

Conclusion: on-device AI is not an option — it’s the direction

When you consider cost, service reliability, and privacy together, AI will inevitably move to a far more on-device-centered approach than today. API costs rise linearly with usage, network instability directly impacts service quality, and architectures that send data outward create larger blast radii when incidents happen. All three problems worsen under a cloud-centric model — and all three have meaningful room for improvement with on-device execution.

That said, we are not claiming that all AI can be handled on-device. Tasks that require very large models, external knowledge, or heavy computation, such as multimodal workloads, naturally favor the cloud. What matters is the ability to decide, case by case, which computations should run on-device and which should be delegated to the cloud. This is where a Function Caller agent becomes increasingly important: it calls external APIs and fetches the data needed to answer user queries.
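As a rough sketch of what such dispatching could look like, assume the model emits a function call as a small JSON object, and each registered function is tagged with where it runs. The JSON shape, the `tool` decorator, and both example functions are hypothetical and do not reflect the output format of any particular model.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Maps a function name to (where it runs, the callable). Hypothetical registry.
REGISTRY: Dict[str, Tuple[str, Callable[..., Any]]] = {}


def tool(name: str, runs_on: str) -> Callable:
    """Register a function the model may call, tagged 'device' or 'cloud'."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        REGISTRY[name] = (runs_on, fn)
        return fn
    return register


@tool("set_light", runs_on="device")
def set_light(room: str, on: bool) -> str:
    return f"{room} light {'on' if on else 'off'}"


@tool("get_weather", runs_on="cloud")
def get_weather(city: str) -> str:
    # Placeholder: a real system would call an external API here.
    return f"weather for {city} (stubbed)"


def dispatch(model_output: str) -> Tuple[str, Any]:
    """Parse the model's JSON function call and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in REGISTRY:
        raise ValueError(f"unknown function: {name}")
    runs_on, fn = REGISTRY[name]
    return runs_on, fn(**call["arguments"])


print(dispatch('{"name": "set_light", "arguments": {"room": "kitchen", "on": true}}'))
print(dispatch('{"name": "get_weather", "arguments": {"city": "Seoul"}}'))
```

Tagging each function with its execution location keeps the on-device versus cloud decision in one place, so a function can be moved between the two without retraining the model that selects it.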

Google has also highlighted the importance of such agents: in December 2025, it released FunctionGemma, a Gemma model fine-tuned specifically for function calling.

ENERZAi — one of the companies most sharply focused on on-device AI — is preparing for this future by integrating function calling into the voice AI pipeline, spanning Noise Reduction, Voice Activity Detection, Keyword Spotting, Speech-to-Text, Natural Language Understanding/Large Language Models, and Text-to-Speech.

Below is a demo video showing ENERZAi’s ultra-lightweight Speech-to-Text model, 1.58-bit EZWhisper, and an NLU model for light control running on a Raspberry Pi 5 (ARM Cortex-A76). We’ll soon share a commercial case study video featuring function calling as well — stay tuned!

ENERZAi has hands-on experience optimizing and commercializing the entire voice AI pipeline in on-device environments, based on our proprietary Optimium compiler and Nadya language. At the same time, we’re also building a hybrid AI architecture that efficiently connects on-device and cloud execution through function calling.

If you’re looking to implement on-device AI in your service, or want to co-design an on-device AI architecture tailored to your hardware environment, feel free to reach out anytime. We’ll propose the most practical and deployable path forward.